In roughly six years, OpenAI has become one of the leading AI research labs globally, alongside other AI players like Alphabet’s DeepMind, EleutherAI and SambaNova Systems. Its enthusiasm for transformers shows no sign of fading.

OpenAI has been making headlines (for both right and wrong reasons) for its research work, particularly in the areas of transformers, unsupervised learning, transfer learning and, most obviously, GPT-3, or Generative Pre-trained Transformer 3.

The Genesis

Two years ago, OpenAI published a blog post and paper on GPT-2, created as a direct scale-up of the 2018 GPT model. That changed everything for the company. It released a small GPT-2 model (124 million parameters) in February 2019, followed by a staged release of its medium model (355 million parameters), along with research with partners and the AI community into the model’s potential for misuse and societal benefit.

Since then, the craze for transformer models has grown significantly. For instance, Adam King launched ‘TalktoTransformer.com,’ giving people an interface to play with the newly released models. Meanwhile, Hugging Face released a conversational AI demo based on GPT-2 models but decided not to release the large GPT-2 model due to ethical considerations.

In addition, Hugging Face released an auto text completion tool called Write With Transformer, a web app it created and hosts, showcasing the generative capabilities of several models, including GPT-2. Researchers with the University of Washington and the Allen Institute for AI also revealed GROVER, a GPT-2-type language model. Deep TabNine, an AI-assisted development tool, built a code autocomplete based on GPT-2. Other 2019 research works based on GPT-2 included DLGNet and GLTR.

Some of the popular research papers published in the same year include ‘Reducing malicious use of synthetic media research: Considerations and potential release practices for machine learning,’ and ‘Hello. It’s GPT-2 – How can I help you? Towards the use of pre-trained language models for task-oriented dialogue systems.’

In August 2019, NVIDIA trained Megatron-LM, an 8.3 billion parameter transformer model, making it the largest transformer-based language model trained at the time, at 24x the size of BERT and 5.6x the size of GPT-2. In the same month, OpenAI released its larger GPT-2 model with 774 million parameters. There was no stopping them.

Enter GPT-3

In November 2019, OpenAI released the complete version of the GPT-2 model with 1.5 billion parameters. This was followed in 2020 by GPT-3, with 175 billion parameters, with access provided only through OpenAI’s hosted API. Other large-scale transformer models include EleutherAI’s GPT-J, BAAI’s Wu Dao 2.0, Google’s Switch Transformer, and NVIDIA and Microsoft’s Megatron-Turing Natural Language Generation (MT-NLG).

OpenAI was founded as a nonprofit AI research entity in 2015 by Sam Altman, Greg Brockman, Elon Musk, and others, who collectively pledged $1 billion with a mission to develop artificial general intelligence (AGI). In 2018, Musk left OpenAI’s board due to a difference of opinion. He has since criticised OpenAI, arguing that the company should be more open.

Later, Microsoft invested about $1 billion in OpenAI and subsequently obtained an exclusive licence to GPT-3’s underlying model. These moves altered the foundation of OpenAI, shifting it away from openness and towards commercialisation and secrecy.

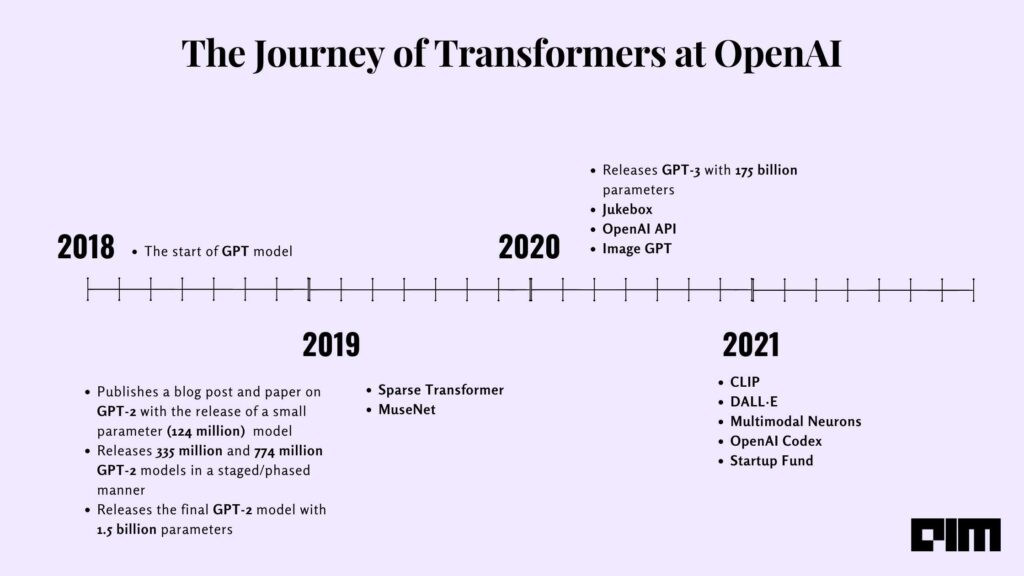

Here’s a complete timeline of OpenAI’s transformer language models in the last three years:

In a recent Q&A session, Altman spoke about the soon-to-be-launched transformer model GPT-4, which is expected to have 100 trillion parameters, i.e., roughly 570x the size of GPT-3. Altman also gave a sneak peek into GPT-5 and said that it might pass the Turing test.
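The size comparison can be sanity-checked with simple arithmetic. The snippet below is a minimal sketch that uses only parameter counts quoted in this article; the dictionary keys and the `size_ratio` helper are illustrative names, and the GPT-4 figure is the expectation reported here, not a confirmed specification.

```python
# Parameter counts as quoted in this article.
# The GPT-4 entry is an expected figure, not a confirmed one.
PARAMS = {
    "GPT-2 small": 124e6,
    "GPT-2 full": 1.5e9,
    "GPT-3": 175e9,
    "GPT-4 (expected)": 100e12,
}


def size_ratio(larger: str, smaller: str) -> float:
    """Return how many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]


if __name__ == "__main__":
    for big, small in [
        ("GPT-4 (expected)", "GPT-3"),
        ("GPT-3", "GPT-2 full"),
        ("GPT-2 full", "GPT-2 small"),
    ]:
        print(f"{big} is about {size_ratio(big, small):.0f}x the size of {small}")
```

On these figures, 100 trillion divided by 175 billion comes to a little over 570, so a multiple of 500 slightly understates the expected jump.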

Besides this, OpenAI recently launched OpenAI Codex, an AI system that translates natural language into code. It is a descendant of GPT-3. The training data contains both natural language and billions of lines of source code from open-source platforms, including code in public GitHub repositories.

Final Thought

OpenAI’s GPT-3 model is one of the most talked-about models globally. That is because it has seen real-world use cases, including language understanding, machine translation, and time-series prediction, among others.

While plenty of new players are emerging in the space and creating large-scale language models using transformers and other innovative techniques, there is no stopping OpenAI, with GPT-4 and GPT-5 just around the corner. The possibilities are immense. It is only going to get more exciting from here on out.